A New System Can Measure the Hidden Bias in Otherwise Secret Algorithms

A powerful tool for algorithmic transparency

Russell Brandom | May 25, 2016


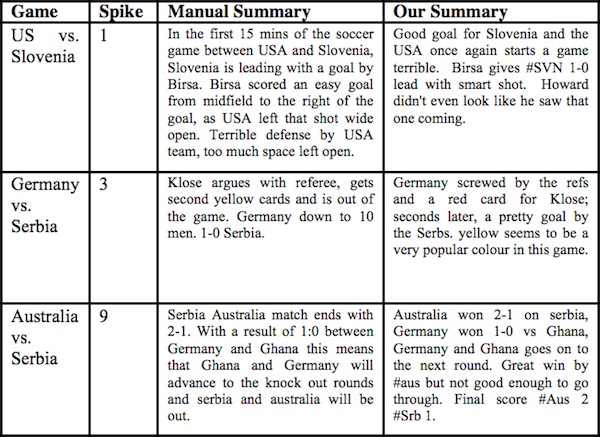

"The IBM system highlights a particular bias that can creep into algorithms though: Any bias in the data fed into the algorithm gets carried through to the output of the system. ...The implications are fairly straightforward: If Slovenia scores on a controversial play against the U.S., the algorithm might output “The U.S. got robbed” if that’s the predominant response in the English tweets. But presumably that’s not what the Slovenians tweeting about the event think about the play. It’s probably something more like, “Great play — take that U.S.!”" Source: http://www.niemanlab.org/2012/12/nick-diakopoulos-understanding-bias-in-computational-news-media/

<more at http://www.theverge.com/2016/5/25/11773108/research-method-measure-algorithm-bias; related articles and links: http://www.theatlantic.com/business/archive/2015/09/discrimination-algorithms-disparate-impact/403969/ (When Discrimination Is Baked Into Algorithms. As more companies and services use data to target individuals, those analytics could inadvertently amplify bias. September 6, 2015) and https://www.technologyreview.com/s/510646/racism-is-poisoning-online-ad-delivery-says-harvard-professor/ (Racism is Poisoning Online Ad Delivery, Says Harvard Professor. Google searches involving black-sounding names are more likely to serve up ads suggestive of a criminal record than white-sounding names, says computer scientist. February 4, 2013)>
